barış serim / hci research

I am an HCI researcher with a PhD from Aalto University. My research is about using visual attention information for human-computer interaction and models of mind in HCI.

research highlights / visit my scholar page for a more complete list

| |

| We define the implications of an integrated view of cognition for brain–computer interface design and evaluation. Embodiment can guide research by 1) providing body-grounded explanations for BCI performance, 2) proposing evaluation considerations that are neglected in modular views of cognition, and 3) directly transferring its design insights to BCIs. We finally reflect on HCI's understanding of embodiment and identify the neural dimension of embodiment as hitherto overlooked. Human–Computer Interaction link pdf |

| |

| This thesis contributes design knowledge about adapting the interaction based on users' level of visual monitoring during input through a series of prototypes that have been developed for different use cases. I first distinguish between different implications of visual attention information for interface design, and identify visual attention as a measure of user awareness as the main focus of the work presented in this thesis. Lack of visual attention during input decreases users' awareness of the environment. In these cases, the system can adapt the interaction through a number of methods such as handling input more flexibly or remediating the lack of visual attention through novel visual feedback techniques. Aalto University link pdf |

| |

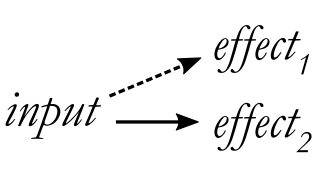

| The term implicit interaction is often used to denote interactions that differ from traditional purposeful and attention-demanding ways of interacting with computers. However, there is a lack of agreement about the term's precise meaning. This paper develops implicit interaction further as an analytic concept, identifies the methodological challenges related to HCI's particular design orientation, and demonstrates how they can be addressed with greater precision by using an updated, intentionality-based definition that specifies an input-effect relationship as the entity of implicit. (CHI'19) doi pdf |

| |

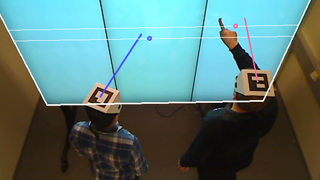

| During collaboration, individual users' capacity to maintain awareness, avoid duplicate work and prevent conflicts depends on the extent to which they are able to monitor the workspace. We propose managing access by taking the visual attention of collaborators into account. For example, actions that require consensus can be limited to collaborators' joint attention, editing another user's personal document can require her visual supervision and private information can become unavailable when another user is looking. We prototyped visual attention-based access for 3 collaboration scenarios on a large vertical display using head orientation input as a proxy for attention. (IMWUT'18) doi pdf video |

| |

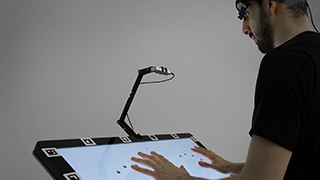

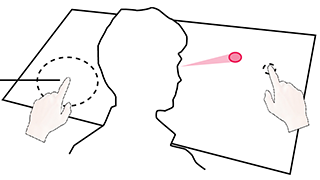

| We explore the combination of above-surface sensing with eye tracking to facilitate concurrent interaction with multiple regions on touch screens. Conventional touch input relies on positional accuracy, thereby requiring tight visual monitoring of one's own motor action. In contrast, above-surface sensing and eye tracking provide information about how the user's hands and gaze are distributed across the interface. In these situations, we facilitate interaction by 1) showing the visual feedback of the hand hover near the user's gaze point and 2) decreasing the need for positional accuracy by employing gestural information. (DIS'17) doi pdf video |

| |

| We propose using eye tracking to support interface use with decreased reliance on visual guidance. While the design of most graphical user interfaces takes visual guidance during manual input for granted, eye tracking allows distinguishing between the cases when the manual input is conducted with or without guidance. We conceptualize the latter cases as input with uncertainty that requires separate handling. We describe the design space of input handling by utilizing input resources available to the system, possible actions the system can realize and various feedback techniques for informing the user. (CHI'16) doi pdf video |

teaching

I lectured on interactive visualization, interaction techniques, user-centered design and prototyping techniques in various bachelor- and master-level HCI courses at the University of Helsinki.

community

I am a frequent reviewer for ACM CHI (excellent reviewer for 2017), MobileHCI, DIS, ICMI and ISS conferences. I was a PC member for CHI'18 Late Breaking Work and ICMI.

stuff that I find relevant

Scientific publishing and peer review. I agree with others that the current system of publishing is long overdue for a change and that we will see developments towards new models of peer review and interactivity.

contact

I am based in Helsinki and open to collaborations. Feel free to contact me through serim(DOT)baris(AT)gmail(DOT)com

© Barış Serim